Working with Data

Scikit-learn Basics

3 min read

Published Nov 17 2025, updated Nov 19 2025

Guide Sections

Guide Comments

Every machine learning project begins with data, and how you manage that data largely determines the success of your models.

In Scikit-learn, all algorithms expect data in numeric, tabular form, typically represented as:

X→ the feature matrix (inputs)y→ the target vector (labels or outputs)

This section covers how to:

- Load data (from built-in datasets, generated data, or external files)

- Explore and understand data structure

- Split data into training and testing sets

- Manage shapes, formats, and types so they align with Scikit-learn’s expectations

The Data Representation

Scikit-learn is designed to work primarily with:

- NumPy arrays

- pandas DataFrames

- SciPy sparse matrices

These formats are used because they are fast, memory-efficient, and easy to manipulate.

Convention:

Xis a 2D array of shape(n_samples, n_features)yis a 1D array of shape(n_samples,)

For example:

Many Scikit-learn utilities accept both NumPy arrays and pandas DataFrames:

Internally, Scikit-learn converts these to NumPy arrays for computation.

Built-in Datasets

Scikit-learn provides a variety of small, well-known datasets for practice and demonstration.

They load instantly and are ideal for testing algorithms or learning workflows.

Common datasets:

Dataset | Function | Description |

Iris | load_iris() | Flower classification (3 classes) |

Wine | load_wine() | Wine chemical properties by cultivar |

Breast Cancer | load_breast_cancer() | Binary classification |

Digits | load_digits() | Handwritten digits (0–9) |

Diabetes | load_diabetes() | Regression dataset |

California Housing | fetch_california_housing() | House price regression |

Example: Loading the Iris Dataset

Output:

Note:

load_iris()returns a Bunch object, a dictionary-like container with attributes (.data,.target,.feature_names, etc.)- Convert to pandas for easier exploration:

Generating Synthetic Data

For testing or demonstrations, you can generate synthetic datasets with controlled properties.

These functions are especially useful for experimenting with algorithms without needing external data.

Examples:

make_classification()- creates random classification problemsmake_regression()- creates regression problemsmake_blobs()- creates clustered datamake_moons(),make_circles()- create 2D toy datasets for visualisation

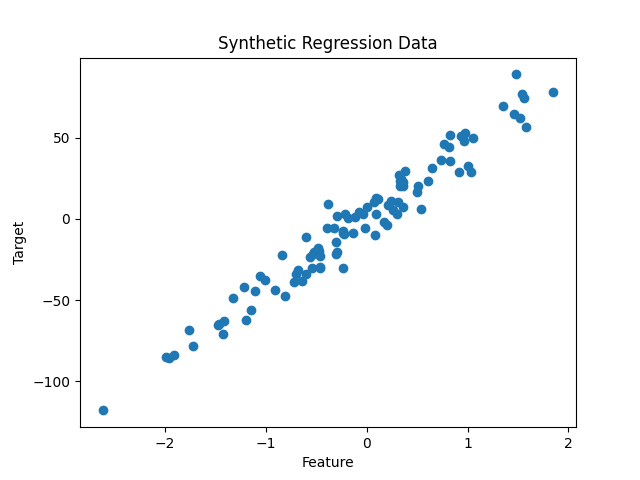

Example: Synthetic Regression Data

These synthetic datasets are invaluable for:

- Benchmarking algorithms

- Testing preprocessing pipelines

- Visualising model behaviour

Loading External Data

Most real-world data comes from files, typically CSVs, Excel sheets, or databases. Scikit-learn doesn’t directly handle file I/O, instead, you’ll use pandas for that step.

Example: Loading a CSV with pandas

Then, you can pass X and y directly to Scikit-learn models:

Note:

- Ensure all input data is numeric, models cannot handle text directly.

- Categorical features must be encoded.

Splitting Data for Training and Testing

When building a model, always split your dataset into training and testing subsets.

This allows you to train the model on one portion and evaluate it on unseen data to measure generalisation.

Scikit-learn provides train_test_split for this:

test_size=0.2- 20% test datarandom_state=42- ensures reproducibility- For classification tasks, use

stratify=yto maintain class balance (the proportion of each class inywill be (as closely as possible) the same in both the training and testing datasets):

Keep your test data untouched until final evaluation. Avoid using it for feature scaling or tuning.

Data Shapes and Sanity Checks

Before training, it’s important to verify that your data is shaped correctly.

Common Issue | Symptom | Solution |

Wrong shape | “Found array with dim 3” | Ensure |

Mismatched samples | “Input variables have inconsistent numbers of samples” | Check that |

Missing values | Model errors or NaNs in input | Handle via |

Quick checks:

Working with Feature Names

When using pandas DataFrames, feature names are preserved automatically through pipelines and transformations.

Example:

Output:

Keeping track of feature names makes interpretation and debugging easier, especially when working with multiple preprocessing steps.