What is Docker

Docker - Overview and how to use it

Published Jul 13 2025

Docker Deep Dive book by Nigel Poulton

I highly recommend reading this book if you are just getting started with Docker. It goes into much more detail on the background and history of Docker and how it works. ISBN: 978-1916585256.

Docker is an open-source platform designed to automate the deployment, scaling, and management of applications inside lightweight, portable containers. At its core, Docker allows developers and system administrators to package an application with all its dependencies—such as libraries, configuration files, and environment variables—into a standardized unit called a container.

This container can then run consistently on any system that supports Docker, largely independent of how the host itself is configured. By isolating the application environment, Docker addresses the classic "it works on my machine" problem: a container that runs on a developer's laptop runs the same way on any other machine running Docker.

Key Concepts:

- Images: Immutable snapshots containing the application and its dependencies.

- Containers: Running instances of these images, with their own isolated processes and file systems.

- Docker Engine: The runtime that manages container lifecycle, networking, and storage.

- Docker Hub: A public registry where images are published and shared.

How Docker Works

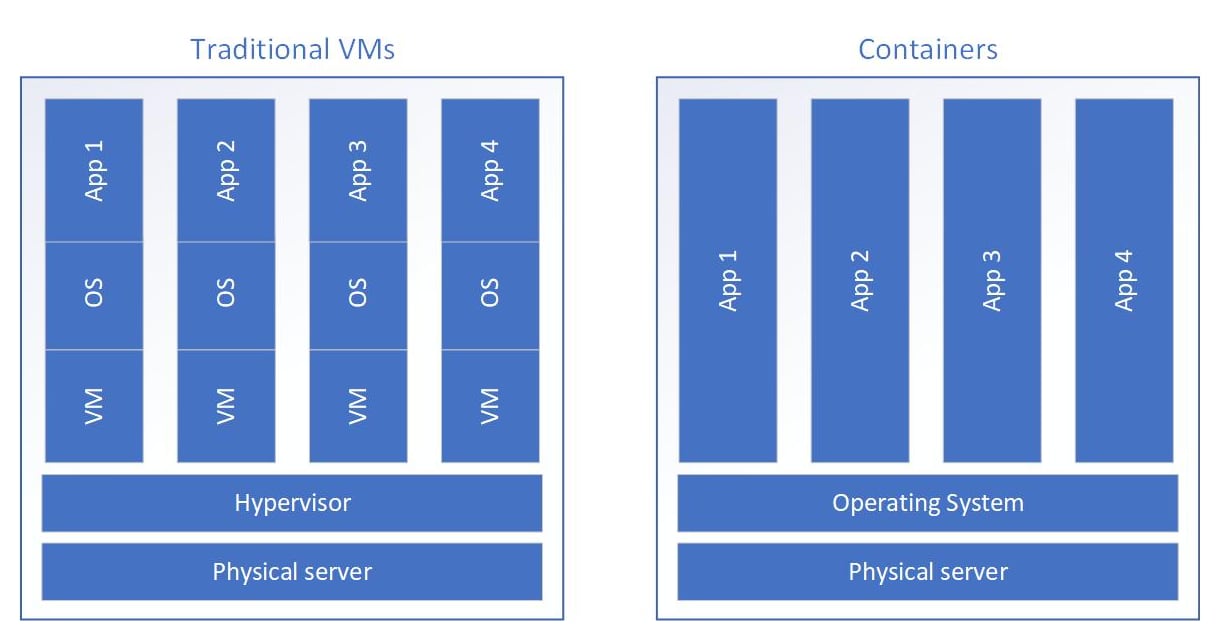

Docker uses OS-level virtualisation, specifically Linux kernel features such as namespaces and control groups (cgroups), to provide process and resource isolation. Namespaces limit what a process can see (process IDs, network interfaces, mount points), while cgroups limit how much CPU, memory, and I/O it can use. Unlike traditional virtualisation, which emulates hardware to run entire guest operating systems, Docker containers share the host OS kernel but run in isolated user spaces.

This design makes containers much lighter and faster to start than virtual machines.
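To make the namespace idea more concrete, the following is a minimal sketch (not part of Docker itself) that lists the namespaces the current process belongs to. It assumes a Linux host, where the kernel exposes them under `/proc/<pid>/ns`; the function name `list_namespaces` is made up for this illustration.

```python
import os
from pathlib import Path


def list_namespaces(pid="self"):
    """Return {namespace_type: identifier} for a process, read from /proc.

    On Linux, /proc/<pid>/ns holds one symlink per namespace the process
    belongs to; two processes share a namespace when the link targets match.
    On systems without /proc (macOS, Windows) an empty dict is returned.
    """
    ns_dir = Path("/proc") / str(pid) / "ns"
    namespaces = {}
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        return namespaces  # not Linux, or the process is not accessible
    for name in entries:
        try:
            namespaces[name] = os.readlink(ns_dir / name)
        except OSError:
            continue  # skip entries we are not permitted to read
    return namespaces


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name:>20}  {ident}")
```

Running this once on the host and once inside a container should, under default settings, show different identifiers for entries such as `pid`, `net`, and `mnt`, which is the isolation described above.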

Difference Between Docker Containers and Virtual Machines (VMs)

Understanding the difference between Docker containers and virtual machines is critical to appreciating Docker's advantages and use cases.

| Aspect | Docker containers | Virtual machines |
| --- | --- | --- |
| Architecture | Share host OS kernel; isolated user space | Full guest OS runs on virtualized hardware |
| Size | Lightweight, often tens to hundreds of MBs | Large, often several GBs due to full OS images |
| Startup Time | Typically seconds or less | Tens of seconds to minutes, due to OS boot time |
| Performance | Near-native, minimal overhead | Additional overhead from hypervisor |
| Resource Usage | Efficient; many containers can run on one host | Heavier; each VM is allocated its own CPU/RAM and runs a full OS |
| Isolation Level | Process-level isolation; weaker than VMs | Stronger isolation; each VM has its own kernel behind a hypervisor |
| Portability | Highly portable across systems with Docker engine | Portable as VM images but heavier to move |
| Use Cases | Microservices, CI/CD, dev/test environments | Running multiple OS types, legacy app support |

Lightweight and Fast - Because containers share the host OS kernel and don’t need to boot a full guest OS, they consume less disk space, memory, and CPU compared to VMs. Starting a container typically takes milliseconds or a few seconds, while booting a VM can take minutes.

Isolation - Docker containers isolate applications at the process level. They have their own network stack, file system (via copy-on-write layers), and process space. However, since they share the host OS kernel, this isolation is weaker than that of VMs, each of which runs its own separate kernel.

Portability - Docker containers can run on any system with the Docker engine installed, provided the image matches the host's kernel type and CPU architecture (Linux containers on Linux hosts; Windows containers on Windows hosts). This makes it straightforward to move applications between development, testing, and production environments.

Why Use Docker?

Simplified Deployment - Docker packages everything an application needs into one image. This eliminates the classic "it works on my machine" problem, since the container always runs with the same dependencies and environment.

Scalability and Microservices - Containers make it easy to split large applications into smaller, loosely coupled microservices. Each microservice runs in its own container, which can be started, stopped, or scaled independently.

Resource Efficiency - Because containers share the OS kernel, you can run many more containers on a single physical machine than you could VMs, optimizing hardware usage and reducing costs.

Developer Productivity - Developers can run applications and their dependencies locally with a single command, in an environment that closely matches production. This reduces bugs and configuration issues caused by differences between machines.

How Containers Manage Storage, Networking, and Processes

Docker containers have isolated file systems, which are layered on top of the base image using a copy-on-write mechanism. Changes made inside a running container don’t affect the underlying image and can be discarded or committed as new images.
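The copy-on-write behaviour can be illustrated with a toy model. This is only a sketch of the idea using Python's `collections.ChainMap`, not how Docker's storage drivers are implemented; the file paths, contents, and the helper names `start_container` and `commit` are all hypothetical.

```python
from collections import ChainMap
from types import MappingProxyType

# Hypothetical image contents: path -> file contents. MappingProxyType makes
# the layer read-only, mirroring the immutability of image layers.
image_layer = MappingProxyType({
    "/app/server.js": "console.log('v1')",
    "/etc/app.conf": "port=3000",
})


def start_container(image):
    """Return a container view: an empty writable layer stacked on the image."""
    writable_layer = {}
    # ChainMap reads top-down through the layers but writes only to the first.
    return ChainMap(writable_layer, image)


def commit(container):
    """Snapshot the container's current view as a new read-only image.

    This flattens the layers for simplicity; Docker instead records the
    container's changes as an additional layer on top of the image.
    """
    return MappingProxyType(dict(container))


container_a = start_container(image_layer)
container_b = start_container(image_layer)

# A write in container A lands in A's writable layer only.
container_a["/etc/app.conf"] = "port=8080"

print(container_a["/etc/app.conf"])  # container A sees its own modified copy
print(container_b["/etc/app.conf"])  # container B still sees the image's file
print(image_layer["/etc/app.conf"])  # the image itself is unchanged
```

Discarding `container_a` throws its changes away, while `commit(container_a)` keeps them as a new image, loosely mirroring the two outcomes described above.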

Networking is managed through virtual networks and port mappings. Containers expose ports internally (for example, port 3000), but Docker allows each container to map those ports to different host ports. This enables multiple containers running the same service to coexist on the same host.
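As a rough sketch of the port mapping described above, the snippet below builds (but does not execute) two `docker run` commands that publish the same container port on different host ports. The image name `example/web-app:latest`, the container names, and the host ports are assumptions chosen for illustration; `--detach`, `--name`, and `--publish` are standard `docker run` flags.

```python
import shlex


def docker_run_command(image, name, host_port, container_port=3000):
    """Build the argv for `docker run` with one published port.

    `--publish HOST:CONTAINER` forwards a host port to a port inside
    the container.
    """
    return [
        "docker", "run",
        "--detach",  # run in the background
        "--name", name,
        "--publish", f"{host_port}:{container_port}",
        image,
    ]


# Hypothetical image name; both containers listen on port 3000 internally
# but are reachable on different host ports.
IMAGE = "example/web-app:latest"
commands = [
    docker_run_command(IMAGE, "web-1", host_port=8081),
    docker_run_command(IMAGE, "web-2", host_port=8082),
]

for argv in commands:
    print(shlex.join(argv))
```

On a machine with Docker installed, each list could be passed to `subprocess.run` to start the containers; here the commands are only printed so the mapping is easy to inspect.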

Each container runs as one or more isolated processes on the host OS. You can limit resources such as CPU and memory per container, so that one container cannot starve the others of resources.
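Per-container limits are typically set when the container is started. The sketch below assembles the relevant `docker run` flags without running anything; `--memory` and `--cpus` are real `docker run` options, while the helper name `resource_limit_flags`, the image name, and the chosen limits are assumptions for illustration.

```python
def resource_limit_flags(memory=None, cpus=None):
    """Translate optional limits into `docker run` flags.

    memory: string such as "256m" or "1g" (passed to --memory)
    cpus:   number of CPUs as a float, e.g. 0.5 (passed to --cpus)
    """
    flags = []
    if memory is not None:
        flags += ["--memory", memory]
    if cpus is not None:
        if cpus <= 0:
            raise ValueError("cpus must be positive")
        flags += ["--cpus", str(cpus)]
    return flags


# Hypothetical image name; limits chosen only for illustration.
argv = [
    "docker", "run", "--rm",
    *resource_limit_flags(memory="256m", cpus=0.5),
    "example/web-app:latest",
]
print(" ".join(argv))
```

Docker enforces these limits through cgroups, so a container started this way should not be able to use more than the stated memory or CPU share regardless of what runs inside it.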